February 17, 2019; US News & World Report

Decisions made in courtrooms, particularly about bail and sentencing, are difficult and weighty; they can change the course of someone's life, often dramatically. So it makes sense that the people making those decisions, usually judges, would want the help of a tool that promises to maximize accuracy. But what if the tool itself is merely a product of the very system it is meant to correct?

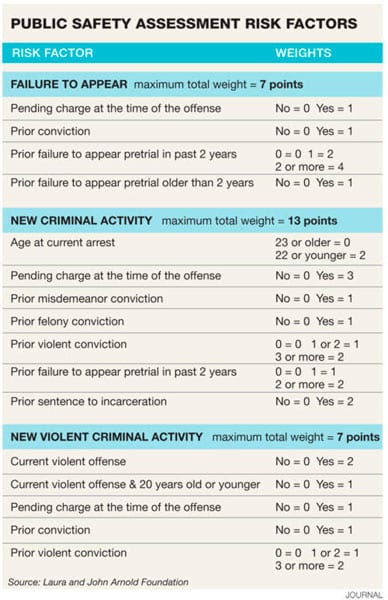

Enter the PSA, or Public Safety Assessment. The PSA was developed by Arnold Ventures, formerly known as the Laura and John Arnold Foundation, and aims to give judges "reliable and neutral" information about defendants in pretrial bail hearings. The PSA estimates the risk of three outcomes:

- Likelihood of committing a new crime

- Likelihood of missing a court appearance

- Likelihood of committing a new violent crime

These scores are meant to help judges decide whether to grant a defendant a "signature bond," which allows release without cash bail when a judge determines the defendant is at low risk on all three measures. The stakes are high: about half a million Americans sit in jail on any given day without having been convicted of a crime. They have been accused, cannot afford bail, and are awaiting trial.

Of course, like any human decisions, these judges' determinations are subject to bias and whim. One study found that Israeli judges were more likely to grant parole right after a meal break. The PSA is meant to counter this by supplying data-driven predictions of behavior.


But as NPQ has pointed out before, algorithms are products of human minds and are not free from the biases of their creators. What these algorithms do is detect and replicate patterns in past data, and those patterns, especially in criminal justice, are created by people's choices. NPQ's Cyndi Suarez has explained that using an algorithm does not remove bias from a decision; it merely exchanges a person's explicit judgment for an implicit decision to rely on the values that informed the chosen algorithm.

Unfortunately, the values that inform the criminal justice system disproportionately punish people of color and people with lower incomes. A 2016 ProPublica investigation found that risk assessments can deepen these racial inequities: one widely used tool wrongly labeled Black defendants as likely to reoffend at nearly twice the rate of white defendants. (Arnold Ventures also funds ProPublica.) The PSA's developers say it does not take race into account when calculating risk, but leaving race out as an input does not remove proxies for it. Nick Thieme of the Institute for Innovation Law at the University of California, Hastings, wrote, "Since people of color are more likely to be stopped by police, more likely to be convicted by juries, and more likely to receive long sentences from human judges, the shared features identified are often race or proxies for race."

Because the PSA is relatively new, there is not yet conclusive data on how it specifically affects pretrial outcomes. The Pretrial Justice Institute found that after Yakima County, Washington, adopted pretrial assessments, release rates for people of color rose at twice the rate they did for white people. In New York, however, residents and advocates did not fully trust the algorithms in use, and the city created a task force to "examine whether the algorithms the city uses to make decisions result in racially biased policies."

There seems to be some consensus that community oversight of pretrial risk assessment tools, by members of advocacy nonprofits and affected communities, could help correct for bias. More than 110 organizations signed a letter opposing the use of pretrial risk assessments, arguing that it would be better simply to focus on ending cash bail. The letter added, however, that if the PSA and similar tools are used, community input and transparency must be part of the process. Signatories included the ACLU, Black Lives Matter Philadelphia, the Arab American Institute, the Center on Race, Inequality, and the Law at NYU Law, and the National Council of Churches.

While the idea of replacing cash bail with any system that might lead to fewer incarcerations is appealing, relying on algorithms without community monitoring when the jury is still out on their overall merit is not advisable.—Erin Rubin