May 18, 2016; The Verge

NPQ reported last week on recent allegations of liberal bias or outright censorship at Facebook. Specifically, Facebook’s Trending News section could be facing perception problems. However, a recent poll by Morning Consult suggests that user trust in the platform isn’t falling.

The poll, conducted “among a national sample of 2000 registered voters,” wrapped up just days after the bias allegations were published, so public opinion may still be shifting. However, its responses suggested that users won’t avoid reading news on Facebook: Almost 47 percent of respondents said they were “somewhat” or “very” comfortable with social media companies controlling what news appears on their sites, compared with only 34 percent who were “not very comfortable” or “not comfortable.”

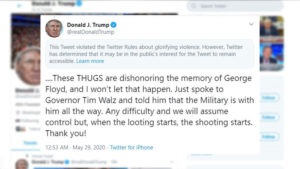

Trending News is a section available on Facebook’s website and mobile app that presents a selection of “trending” headlines. A user’s geographic region, demographics, and interests play into an algorithm (itself an automated process designed by humans and, therefore, potentially biased) to determine which stories are served to that user, but the algorithm doesn’t actually compile the stories. That job belongs to a team of contracted journalists—the news curators—who select which stories make the trending list, write headlines for those stories and choose which sources they’re linked to. Last week, Gizmodo published quotes from former Facebook “news curators” who said that they had suppressed certain stories—allegedly, those with a conservative bent—from appearing in this section and sometimes artificially promoted story lines with a more liberal focus.

The selection of stories is a multi-part process governed by a set of guidelines from Facebook, but it is not an unbiased one. One of the contractors interviewed stated that stories that appeared only on conservative sites were routinely left out of the trending list. Another said that the end goal of Trending News was user engagement: The curators select content that garners more clicks.

Trending News is separate from the News Feed, the main display on Facebook showing activity by friends, liked pages, and advertisers. Trending News’ set-apart location, and the absence of any acknowledgement of the curation process, may have implied that the stories were algorithmically selected, based on which articles were receiving the most readership across Facebook at any given moment. The allegations of bias led to concerns about Facebook’s trustworthiness as a news platform, and calls for increased transparency—see this one by our own Michael Wyland.

The Morning Consult poll suggests that many Facebook users have lower expectations. When asked how social media companies should determine which news stories to show to their readers, 11 percent of respondents said the determination should be based on editor discretion, and 29 percent said that it should be based on a mix of editor discretion and level of reader interest. About one-third said that social media companies should use level of reader interest as the sole determinant. In response to the question, “How do you think social media companies determine which news stories to show to their readers?” only 20 percent believed that reader interest was the determining factor. Forty-seven percent said that social media companies use editor discretion or a mix of editor discretion and reader interest. Thirty-three percent didn’t respond, or said they didn’t know.

Editor discretion is, of course, not the same as brazenly biased selection. But some users—at least in this poll—don’t expect to be shown a list of “true-trending” stories on social media. They expect human involvement in story curation.

There’s good reason for an expectation of manipulation: Facebook manipulates what we see all the time by changing the content of our News Feeds. Facebook’s algorithms determine which of our friends’ posts appear in our feeds, based on users’ interactions with their friends and a variety of other factors. Previously, those same algorithms have been tweaked for controversial experiments on user behavior and mood. Facebook’s ad targeting shows us products based on our interests (and on sellers’ bids for clicks, likes or impressions). Recently, other news outlets have been taking up Facebook’s offer for shared revenue, and better exposure on Facebook, in exchange for in-app content and videos. News Feed ranking adjustments aren’t always performed by humans like the Trending News curators, but a mix of human ranking and click-driven algorithms ensures users’ News Feeds aren’t ranked by pure chronology or popularity.

Should Facebook present its Trending News for what it is—a list of popular stories, selected by human curators with inevitable biases—in a more transparent manner? Certainly. Do readers actually expect social media platforms to provide unbiased information? Almost certainly not.—Lauren Karch